
January 08, 2006

Accuracy and Precision

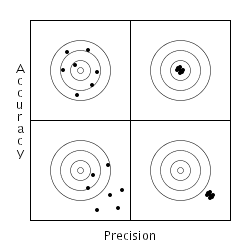

When I was getting my EE degree many years ago, one of my professors (Dr. Dave) gave an interesting lecture on the difference between accuracy and precision. Even though many people use these terms interchangeably, they are separate concepts. Possibly the most important reason to separate them is that they can vary independently. The accompanying diagram shows the difference between accuracy and precision (and is taken from my memory of the lecture).

On this chart, accuracy increases as you move upward and precision increases as you move to the right. The upper right corner represents what most people think of when they are talking about either high accuracy or precision. The lower left corner represents what people think of as low accuracy or precision. Interestingly, most people don't think about the other two quadrants.

As programmers, we deal in words and concepts all of the time. There is almost nothing physical about most of what we do. This means that concepts are extremely important to our work. Subtle distinctions in concepts can mean the difference between a project that works and one that fails. In this case, differentiating between precision and accuracy can be quite useful. For example, if you are not accurate, it doesn't matter how precise you are (lower right corner). This is obvious when looking at the bullseye in the picture, but most of us have seen projects where someone tries to fix an accuracy problem by changing from float to double to get more decimal places. Changing data types may change the outcome because the dynamic range changes, but merely increasing the number of significant digits in the output is usually not enough.
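As a rough illustration of the float-versus-double point, here is a minimal Python sketch. Everything in it is made up for the example: the crude constant `ROUGH_PI` stands in for whatever is really causing the accuracy problem, and `to_float32` and `circle_area` are hypothetical helpers. Python floats are already doubles, so the sketch simulates a 32-bit float by round-tripping through `struct`.

```python
import math
import struct

def to_float32(x: float) -> float:
    """Round a Python float (a 64-bit double) to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", x))[0]

# Hypothetical accuracy bug: the model uses a crude constant.
ROUGH_PI = 3.14

def circle_area(radius: float, pi_value: float, as_float32: bool) -> float:
    """Compute an area, optionally rounding the result to float precision."""
    area = pi_value * radius * radius
    return to_float32(area) if as_float32 else area

true_area = math.pi * 2.0 * 2.0
area32 = circle_area(2.0, ROUGH_PI, as_float32=True)
area64 = circle_area(2.0, ROUGH_PI, as_float32=False)

# The error relative to the true value is dominated by the bad constant,
# not by how many digits the data type can carry.
err32 = abs(area32 - true_area)
err64 = abs(area64 - true_area)
print(f"float32 result: {area32:.12f}  error: {err32:.6f}")
print(f"float64 result: {area64:.12f}  error: {err64:.6f}")
```

Both errors should come out nearly identical. The double version prints more trustworthy digits of the same wrong answer.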

Likewise, if an experiment is not giving the right answer, increasing the number of measurements may not change the outcome. More data to average increases the precision, but does not change the accuracy of the answer. Understanding these differences can mean the difference between wasting a lot of time generating a very precise, wrong answer and rethinking an approach to increase the accuracy of the answer.

Amusingly enough, most of us have run into cases like the upper left corner. We know we have data in the right general area (accurate), but any individual point may vary due to some kind of noise. By averaging a large number of points we increase the precision without affecting the accuracy. Although most of us have a good feel for the mechanics of averaging, making the distinction between precision and accuracy simplifies the discussion of what we are doing. That leads us to an important observation: simple post-processing of data can increase the precision of an answer, but it rarely changes the accuracy.
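The averaging argument can be sketched with a small simulation. This is only an illustration under assumed numbers: `TRUE_VALUE`, the 0.5 bias, the Gaussian noise model, and the helper names are all hypothetical. It averages noisy readings from an unbiased instrument and from a biased one, then looks at where the averages land and how tightly they cluster.

```python
import random
import statistics

TRUE_VALUE = 10.0  # hypothetical quantity being measured

def mean_of_readings(bias, noise_sd, n, rng):
    """Average n simulated readings: true value + fixed bias + Gaussian noise."""
    readings = [TRUE_VALUE + bias + rng.gauss(0.0, noise_sd) for _ in range(n)]
    return statistics.fmean(readings)

def center_and_spread(bias, noise_sd, n, trials, rng):
    """Repeat the averaging experiment; return where the means land and their scatter."""
    means = [mean_of_readings(bias, noise_sd, n, rng) for _ in range(trials)]
    return statistics.fmean(means), statistics.stdev(means)

rng = random.Random(2006)  # fixed seed so the run is repeatable
results = {}
for label, bias in (("unbiased", 0.0), ("biased", 0.5)):
    for n in (10, 1000):
        results[(label, n)] = center_and_spread(bias, 1.0, n, 200, rng)
        center, spread = results[(label, n)]
        print(f"{label:8s} n={n:4d}  center of means={center:.3f}  "
              f"spread of means={spread:.3f}")
```

In both cases, the spread of the averages should shrink as `n` grows (more precision). The biased instrument's averages, however, stay centered about 0.5 away from the true value no matter how many points are averaged (no gain in accuracy).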

Throughout our field, concepts like these mean the difference between making progress and wasting time. Sometimes the differences between concepts are subtle, but that does not make them less important.

Update: Several people have asked about this essay and the diagram above. In the interest of giving credit where credit is due, the professor mentioned is Dr. David Shattuck, who was at the time an Electrical Engineering professor at the University of Houston.

Posted by GWade at January 8, 2006 07:50 PM.